I was perusing my Twitter feed and spotted a great article from Mark Tuckeron the gaps between first-party and third-party voice apps entitled: Better Business Conversations Start with Third-Party Voice Apps, But First-Party Rules Today. It triggered some old conversations and concerns I’ve had going back to the inception of my own company’s Action and Skill.

Speaking from a position as an early adopter of voice, I have been pondering how we might bridge this gap between first-party and third-party voice experiences. I often find myself explaining the nuances of the two interaction models to internal partners, especially those who are new to voice and might be trying it on their own for the first time. I recall a brief product demo I did for one of our executives who stopped by my booth at an internal tech convention back in late 2016. Realizing that skill invocations, i.e., “Hey Google, ask ”, or “Alexa, launch ”,don’t necessarily follow conventional human conversation patterns, I printed out a handful of example invocation phrases the night before and posted them next to our demo units to guide new users down the happy path. Of course this leader decided to ignore the prompts and engage the voice assistant in a way that launched a first-party experience that was completely confusing and out of context. He left the booth with the suggestion that “our demo needed to be fixed” before any customers saw it. Sigh.

Fast forward to 2019, and we’re still challenged with the interplay between first-party and third-party experiences. Outside of direct invocation, the ability to discover or link to third-party voice experiences through voice is somewhat limited. There are current techniques and efforts trying to help with this, including establishing direct connections, platform methods to route from first to third party, or manifesting first-party customer experiences on behalf of brands:

- On the third-party side, we’ve been using marketing to educate the customer on how to invoke our Action/ Skill to connect users directly to our Action and Skill — a non-technical solution to entice customers to invoke the third-party experience

- Other third-party voice apps are using the in-skill experience to get users to directly invoke/ deep link into adjacent skills, doing an end-around on the first-party experience. A good example of this is the Song Quiz Skill. It plays an informative trailer prompting the user to say an invocation phrase of a sister skill (“Alexa, open volley.fm”) when the stop intent is invoked.

- Alexa introduced CanFulfillIntentRequest (beta) to “increase discoverability of your skill through name-free interaction”. App developers enable the capability by including name-free invocations in their application manifests, ensure their skill has the ability to handle the intents, and then rely on Amazon to choose the correct user treatment out of a list of candidate skills that might be able to handle the user’s request.

- As mentioned in the article, Google Assistant seems to be utilizing other services (Wikipedia, Yelp, etc.) to connect users to knowledge, but that has limited third-party benefits…it doesn’t exactly drive the user to the third-party voice application, and the app owner or brand usually doesn’t own the customer experience

Enter the web and schema.org

The key for linking first-party to third-party experiences for brands may lie not in their voice apps, but on their websites. schema.org was created to improve the web and make it easier for search engines and users to find relevant information. However, there’s no reason we can’t use and extend the conventions established by schema.org to make first-party experiences better and seamlessly connect them to third-party voice applications.

While I wish I could take credit for developing entirely new methods to solve this problem, the concept isn’t new. In fact, Google seems to be testing the schema.org speakable property as a solution to allow voice app publishers to surface appropriate content to be read aloud on Google Assistant…a first step at integrating first and third party voice applications.

Examples

So…using the web to connect voice applications? Let’s take a look at two examples. First, a scenario that should be in play in the near-term to surface content in voice. Second, a hypothetical future-state proposal that would surface content and provide a bridge from first-party to third-party experiences. For both, we’re going to assume that the major voice players have systems in place to consume schema.org metadata via our web pages.

Example 1: Enable brands/ voice apps to provide basic first-party responses through schema.org speakable (near-term solution, Google “beta” recommendation)

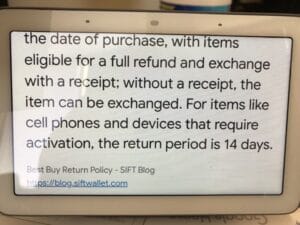

As outlined in Mark’s article, voice conversations are starting in first-party experiences and the platforms are controlling the responses, often times sourcing answers from places like Wikipedia or Yelp. Take, for example, what happens when I ask Google Assistant, “Hey Google, what is Best Buy’s return policy?”

Google Assistant has an answer for me, but it’s coming from Siftwallet, a source I haven’t heard of. While Siftwallet is probably close on the return policy information, Best Buy and it’s Google Action should be the source of record on any policy information returned to the customer. If members from Best Buy’s legal team are reading this, it’s time to start the fire drill 🙂

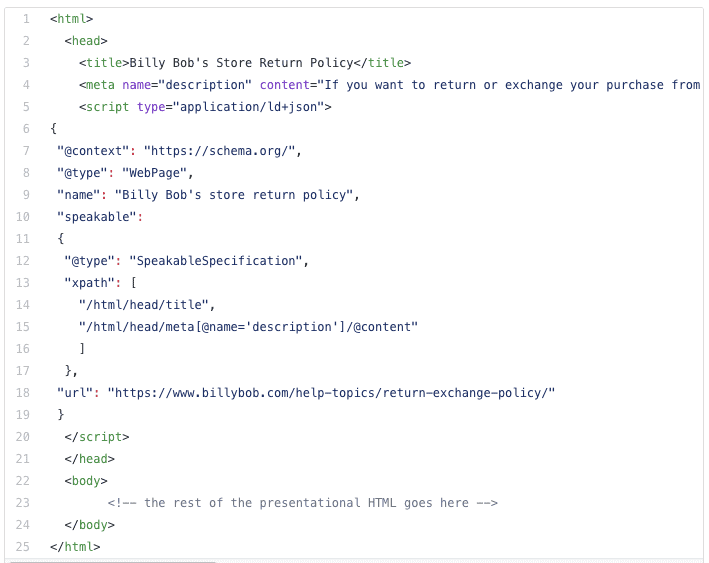

So let’s say Billy Bob’s Store wants to avoid the same pitfall as Best Buy and own the first-party version of their return policy. Billy Bob’s webmaster could implement a short voice-friendly meta description of their return policy with the schema.org speakable JSON-LD markup specification, and publish it in the HTML of the customer-facing return policy web page. Doing this would notimpact the web user’s experience, but it would give Google Assistant a speakable source of truth for Billy Bob’s return policy.

- schema.org Speakable JSON-LD markup

In our code, we use JSON-LD to indicate the location of the speakable content of the webpage which contains the desired voice-friendly response for use by a first party. This particular example uses XPath, but the standard also supports CSS selectors. After these minor additions, a voice user interaction might sound something like this:

Jane: “Hey Google, what is Billy Bob’s return policy?”

Google Assistant (first-party): “According to Billy Bob’s website: If you want to return or exchange your purchase from Billy Bob’s store, the time period begins the day you receive your product…”

Great! By adding the schema.org speakable specification to Billy Bob’s return policy page HTML source, Billy Bob’s return policy content is returned — Billy Bob’s Store actually owns the message — so there’s no ambiguity on who’s providing the information.

I think it’s also worth exploring using additional schema.org markup beyond this simple example. The standard is rich with vocabularies that define all sorts of things, from products and offers to how-to instructions and events. I can imagine a scenario where voice platforms enable “voice knowledge panels” similar to Google’s knowledge panel on the web where a first-party voice treatment is owned by the platform, but the knowledge is defined by the brand owner through schema.org JSON-LD.

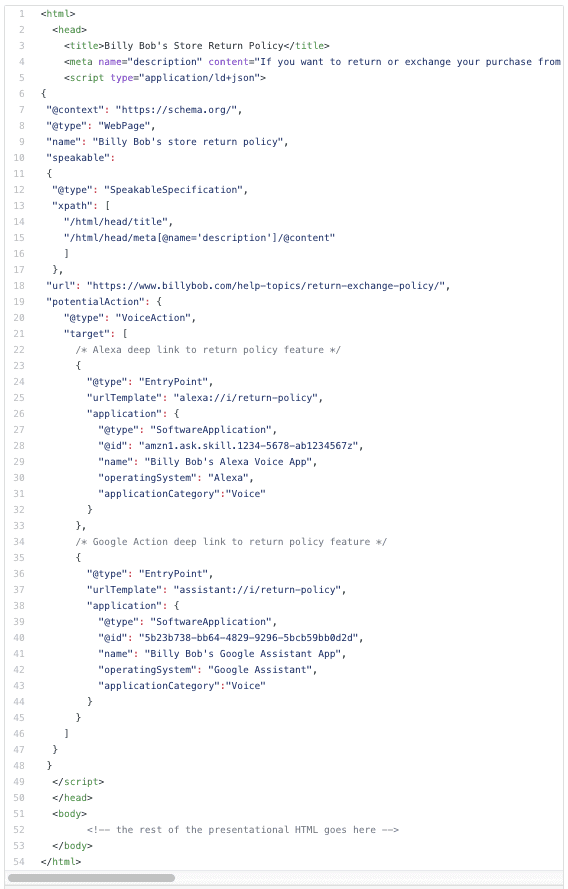

Example 2: Future schema.org based methods where the first-party experience can send the user to the appropriate third-party voice Action or Skill (hypothetical)

While surfacing brand-owned content in first-party voice is a good step, one major beef I have with both Assistant and Alexa is the lack of a clear linking strategy to bridge the gap between first and third party. The value of a third-party experience is greatly diminished if a user can’t be connected to it. Alexa is attempting to fix this with CanFulfillIntentRequest, but it requires the skill developer to do a lot of the heavy lifting in their skill manifest. There should be a more organic way to discover and connect to third-party Skills and Actions.

We may be able to lean on schema.org conventions to solve for this. schema has the concept of a potentialAction, used for describing the capability to perform an action, and how it could be acted upon. There are a few existing action types — WatchAction for Movie types which provide a video resource link, BuyAction for linking to transactional capabilities, or ViewAction that defines deep links into a web site or mobile app.

I would propose the creation of a new Action type called VoiceAction that provides an EntryPoint link from first-party to third-party voice apps. This would also require Assistant and Alexa platforms to define deep linking schemes to identify the route to connect the user to the correct third-party voice app. Linking would be done by web devs publishing VoiceActions on their web pages, and voice platforms would consume the VoiceActions and deep link voice users to the appropriate third party experience.

- schema.org JSON-LD “VoiceAction” markup to include links to Alexa and Google Assistant

This example is a little more complex. We extend the first code sample and include two VoiceAction target directives to indicate support for voice linking to Google Assistant and Alexa, and provide the correct voice app identifiers and paths for utilization by the platform. By adding this additional markup, a hypothetical first-party user experience might sound a little like this:

Jane: “Hey Google, what is Billy Bob’s return policy?”

Google Assistant (first-party): “According to Billy Bob’s website: If you want to return or exchange your purchase from Billy Bob’s store, the time period begins the day you receive your product. Can I take you to Billy Bob’s Store?”

Jane: “Yes, please”

Billy Bob’s Store Action: “Welcome to Billy Bob’s, can I help you with a product return?”

Final Thoughts

I’ll admit I am an early supporter and adopter of schema.org as foundational building blocks for a smarter internet. In these hypothetical examples, we are “feeding two birds with one scone” — expanding and promoting schemas for structured data on the web while providing voice users with better experiences through data.

We continue to develop foundational elements of voice, including third-party app discovery and linking (can we call it Voice SEO? ????). Moving forward on the aforementioned methods would require actions on both sides. On the platform side (Google Assistant, Alexa, Bixby, Apple, et al), orgs would need to adopt the standards and index or otherwise reference the web pages associated with Actions and Skills. Third party app devs would need to get cozy with their web development counterparts and have comprehensive metadata strategies that span all channels.